PRESENTATION DECK:

https://docs.google.com/presentation/d/1cPPR8hYR3b0oAD_mSMG24I5TcbNT8Zs0gJDa2Jc24jA/edit?usp=sharing

NON-HUMAN SONGS FROM POD SCAPE:

POD GUIDE:

DESCRIPTION:

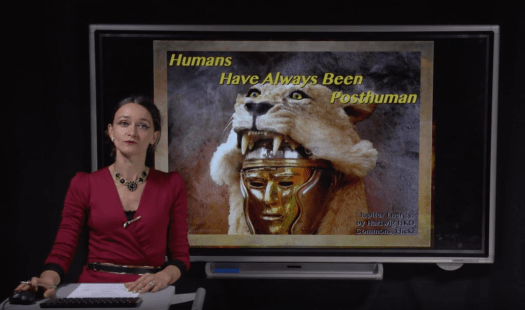

I first became interested in exploring what “Posthuman” means about a year before I came to ITP. The name is evocative, sounds paradoxical, and is kind of annoying. Once I started my research I became more confused before reaching a firmer understanding: “Posthumanism” and “Transhumanism” are not the same thing, though the terms sometimes get used interchangeably. I wish I had come across Cary Wolfe’s book earlier, where Donna Haraway praises him for making clear what Posthumanism is. But I think it was important for me to tread through the confusion, as I’m sure many do when approaching this area of thought.

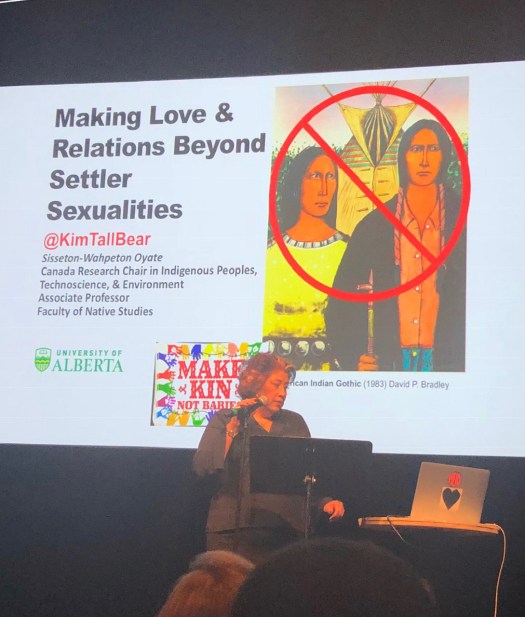

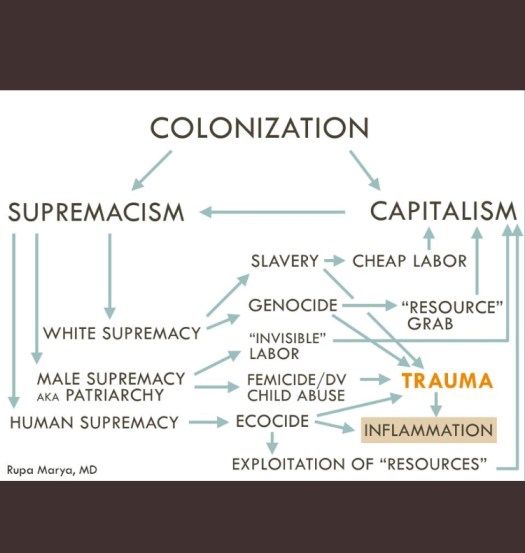

“Transhumanism” is the enhancement of humans through technology, also called “H+”, and it supports anthropocentrism. My working definition of “Posthumanism” is: disrupting the ideas of what humanism has meant in Western culture for about 500 years, and how those ideas have also affected our relation to nonhuman entities. Critical race theory, postcolonial theory, gender theory, and queer theory have already been doing this, along with the human-decentering ideas of theorists in the last century. Some artists, certain communities, and individuals have been creating this shift for years. It is not a shift to only nonhuman forms, but an embrace of multiple perspectives that include non-human beings: creatures and ghosts / spirituality. It is an attempt to approach post-duality. The technological extension of humans and other species is relevant within Posthumanism, since technology shapes our consciousness, affects our bodies, and is now its own entity in the world.

I’m drawn to this idea of a word that attempts to hold space for non-dominant voices, figuratively and literally, and this area of study is vast. In my research I zeroed in on the more metaphysical aspects of this exploration: healing, exploring temporalities through speculative narratives and poetry rituals, and how these ideas are put into practice by various artists. Underlying all of my work is healing, and healing is ultimately space-time travel. We can access what we are healing from, individually, through visualizing and active engagement with the nonlinear. We can get in touch with the physicality of the past living in our bodies, nervous systems, and minds, and with non-direct physicality through resonance. We can’t time travel on the linear aspect of our experience, but we can engage in nonlinear spacetimemattering through active presence and engagement from our position in linear experience/perspective. That means engaging with our individual and collective ghosts, or energetic imprints, or the lack of something. Building on and appreciating established global traditions, how can we explore new ways?

I think the powerful ability of poetry to hold the most painful experiences and find beauty in perceived hell, and its ability to time travel, like healing and sci-fi / speculative fabulation, is central to this piece. The power of sci-fi to write stories and new possibilities into existence, and its wide holding space for addressing the intra-connection of life and systems, is what has always drawn me to it as a comforting (not only in a utopic sense but as a space holding), hopeful, and exciting beacon, and as a potential mystical act.

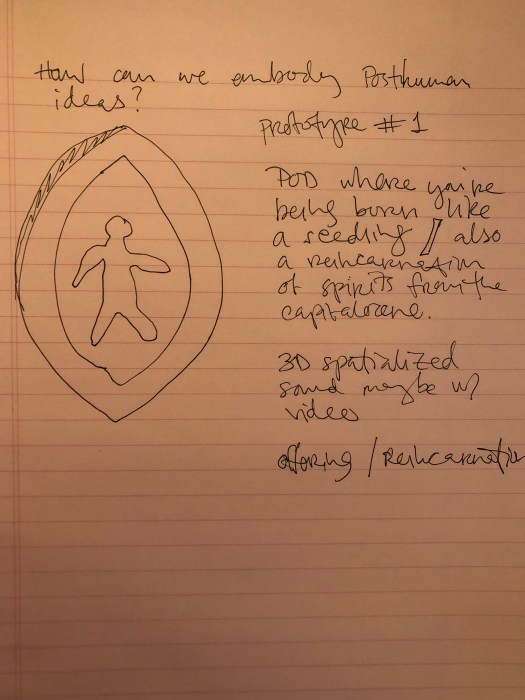

The accompanying art project will be ongoing and is a song book: songs and tracks organized into different chapters. One chapter I finished is about songs from non-human perspectives, and another is poetry rituals from the future (this will be part of future creation). The song book will be set inside a pod system, where each pod is the gestation period of one of the various ghosts of the anthropocene (people, animals, plants, etc.). In order for them to reincarnate physically further or not (one is not superior to the other in this setting), the person interacting must hold space for their pod song/recording/story. The pods might be virtual or a physical installation.

RESOURCE LIST:

Online Resources:

https://www.are.na/nire-erin/posthuman-ism-exploration

Books:

(the complete list will be added tomorrow)

FINAL REFLECTION:

It’s been a really beautiful process researching these ideas and putting them into practice, specifically during the 7 day practice: making songs, imagining myself embodying the pod guide, and thinking of FAQs and making answers to them.

I cried about once a week (which made me think I was on the right path for me, right now) while reading about all the different aspects of Posthumanism and looking for artists who are speaking non-dominant voices into existence in various ways. Becoming intimate with an idea (or ideas), and thinking about where my personal inquiry lies within that frame, made me feel like I was connecting with it energetically through attention and resonance: people, plants, animals, fungus, things. It felt comforting to find so many people who are dedicated to untangling dominant ideas that are oppressive and destructive, from macro paradigms all the way to quantum physics to ghosts. It has also led me to speak with people in person, which was really fulfilling and grounding and felt very supportive.

I would love to explore this project further, possibly in a physical installation of the pod scape. I think building a performance with the system would be interesting. I’d like to ask various people to make their own pod story, so that there are various downloads.